In this article we are going to learn about the best Python libraries for web scraping. Web scraping is a technique for extracting data from websites. The data can be anything from text, images and videos to more structured information such as tables, lists and databases. Python offers several libraries and frameworks for web scraping; in this article we will look at some of them and create some basic examples.

What is Python Web Scraping?

In Python, web scraping can be done with libraries such as BeautifulSoup, Requests and Selenium. With these libraries you send HTTP requests to a website, retrieve the HTML content of the page, and parse it to extract the information you need. After scraping, you can use this information for different purposes, such as data analysis, content aggregation and price comparison.
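The request-then-parse workflow described above fits in a few lines when Requests and BeautifulSoup are combined. This is a minimal sketch, assuming both libraries are installed (installation is covered later in this article); `fetch_html` and `extract_links` are hypothetical helper names chosen for illustration.

```python
from typing import Dict, List

import requests
from bs4 import BeautifulSoup


def fetch_html(url: str, timeout: float = 10.0) -> str:
    """Download a page and return its HTML as text."""
    response = requests.get(url, timeout=timeout)
    response.raise_for_status()  # stop early on 4xx/5xx responses
    return response.text


def extract_links(html: str) -> List[Dict[str, str]]:
    """Return the text and href of every <a> tag that has an href attribute."""
    soup = BeautifulSoup(html, "html.parser")
    return [
        {"text": a.get_text(strip=True), "href": a["href"]}
        for a in soup.find_all("a", href=True)  # href=True skips <a> tags without a link
    ]
```

Keeping the download and the parsing in separate functions means the parsing step can be tested on a saved HTML string without touching the network; in practice you would call `extract_links(fetch_html("https://www.example.com"))`.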

Best Python Frameworks and Libraries for Web Scraping

Here are some of the best Python frameworks and libraries for web scraping:

- Scrapy: An open-source, high-level framework for large-scale web scraping projects. It provides an efficient way to extract data and store it in structured formats, and it handles tasks such as request retries and concurrency for you.

- BeautifulSoup: A popular library for parsing HTML and XML content. It lets you extract information from complex web pages and is well suited to smaller web scraping projects.

- Requests: A library for sending HTTP requests and handling the responses. It is commonly used to retrieve the HTML content of a website, which you then pass to a parser such as BeautifulSoup.

- Selenium: A tool for automating web browsers that can also be used for web scraping. It is useful when a page's content is generated dynamically by JavaScript.

- Scrapylib: A collection of add-on utilities for Scrapy projects, such as extra middlewares, pipelines and extensions.

- PyQuery: A library for selecting elements in HTML and XML content with CSS selectors. It offers a jQuery-like syntax for extracting information from web pages.

These are some of the most popular and widely used frameworks and libraries for web scraping in Python. The best choice for a particular project depends on several factors, including the size and complexity of the project, the type of data you need to extract and the websites you are scraping.

What is Scrapy?

Scrapy is an open-source, high-level framework for web scraping and data extraction in Python. It was designed specifically for large-scale web scraping projects and makes it easy to extract data from websites.

Scrapy provides many features that make it one of the best choices for web scraping projects. Here are some of them:

- Request handling: Scrapy sends requests to websites and manages the responses automatically, including retrying failed requests and handling concurrency.

- Data extraction: Scrapy provides an easy way to extract information from the HTML content of web pages using CSS selectors or XPath expressions.

- Data storage: Scrapy has built-in support for exporting extracted data in different formats, including CSV, JSON and XML.

- Crawling: Scrapy makes it easy to follow links and crawl websites recursively.

How to Install Scrapy?

You can install Scrapy with pip. Open a command prompt or terminal and run this command:

```bash
pip install scrapy
```

Create Your First Project in Scrapy

Here is a basic example of how to use Scrapy to scrape data from a website.

Start a new Scrapy project by running the following command in a terminal or command prompt window:

```bash
scrapy startproject project_name
```

Change into the newly created project directory and create a new Scrapy spider:

```bash
cd project_name
scrapy genspider example_spider website_domain
```

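Open the spider file in the spiders directory (e.g., example_spider.py) and replace its contents with the following code. The CSS selectors match the markup of quotes.toscrape.com, a sandbox site built for scraping practice, and the spider's name attribute is what you pass to scrapy crawl:

```python
import scrapy


class ExampleSpider(scrapy.Spider):
    # Must match the name used in "scrapy crawl example_spider"
    name = "example_spider"
    start_urls = [
        "https://quotes.toscrape.com/page/1/",
    ]

    def parse(self, response):
        # Each quote on the page sits in a <div class="quote"> element
        for quote in response.css("div.quote"):
            yield {
                "text": quote.css("span.text::text").get(),
                "author": quote.css("span small::text").get(),
                "tags": quote.css("div.tags a.tag::text").getall(),
            }
```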

Run the spider by executing the following command:

```bash
scrapy crawl example_spider
```

What is BeautifulSoup?

BeautifulSoup is a Python library used for web scraping. It lets you parse HTML and XML documents and extract specific elements or data from them. BeautifulSoup provides methods to navigate, search and modify the parse tree of an HTML or XML document, which makes it a useful tool for web scraping, data mining and data analysis. It is typically used together with other Python libraries, such as Requests to fetch pages over HTTP and lxml to handle the low-level parsing.

You can install BeautifulSoup with pip by running the following command in the terminal:

```bash
pip install beautifulsoup4
```

You may also need to install the lxml or html5lib parsers by running:

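```bash
pip install lxml
```

or

```bash
pip install html5lib
```

Once one of these parsers is installed, you can select it by passing its name as the second argument to BeautifulSoup, for example BeautifulSoup(html, 'lxml') or BeautifulSoup(html, 'html5lib'). Python's built-in 'html.parser' needs no extra installation.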
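If your code may run on machines where lxml or html5lib is not installed, one option is to try the parsers in order of preference and fall back to the built-in one. This is a sketch: `make_soup` and `PREFERRED_PARSERS` are hypothetical names, and the approach relies on BeautifulSoup raising `FeatureNotFound` when a requested parser is missing.

```python
from bs4 import BeautifulSoup, FeatureNotFound

# Parsers in order of preference: lxml is fast, html5lib parses like a browser,
# and html.parser ships with Python, so it is always available.
PREFERRED_PARSERS = ("lxml", "html5lib", "html.parser")


def make_soup(markup: str) -> BeautifulSoup:
    """Parse markup with the first installed parser from PREFERRED_PARSERS."""
    for parser in PREFERRED_PARSERS:
        try:
            return BeautifulSoup(markup, parser)
        except FeatureNotFound:
            continue  # this parser is not installed; try the next one
    raise RuntimeError("no HTML parser available")


soup = make_soup("<p class='intro'>Hello</p>")
print(soup.p.get_text())
```

Be aware that different parsers can build slightly different trees for broken HTML, so results from the fallback parser may not match lxml exactly on malformed pages.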

Create Your First Project in BeautifulSoup

Here is a basic example of using the BeautifulSoup library to parse HTML:

```python
from bs4 import BeautifulSoup

html = '<html><body><h1>Hello World</h1><p>This is a paragraph.</p></body></html>'
soup = BeautifulSoup(html, 'html.parser')

# Extract the text from the h1 tag
h1_tag = soup.find('h1')
print(h1_tag.text)  # Outputs "Hello World"

# Extract the text from the p tag
p_tag = soup.find('p')
print(p_tag.text)  # Outputs "This is a paragraph."
```

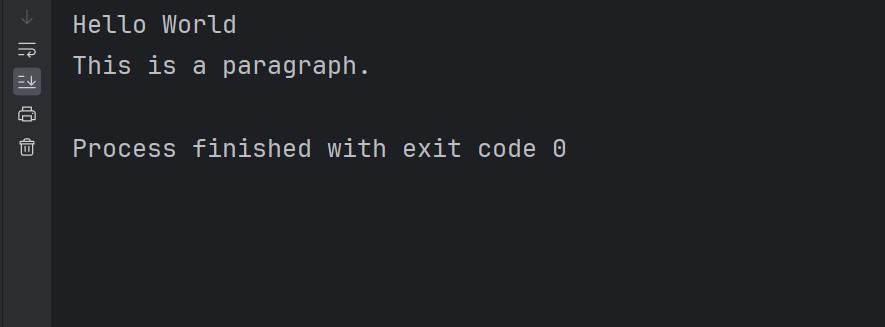

In this example first of all we have imported BeautifulSoup class from the bs4 module. after that we have created variable html which contains a simple HTML document. than we have created an instance of the BeautifulSoup class and pass in the HTML document and the parser we want to use. In this case we are using the built in ‘html.parser’. finally we use the find() method to search for specific tags and extract the text contained within them.

Run the code and this will be the result
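Beyond find(), BeautifulSoup can return every match with find_all() and supports CSS selectors through select(). The sketch below turns the rows of a small HTML table into dictionaries; the price table and the `parse_price_table` helper are hypothetical, made up for illustration.

```python
from typing import Dict, List

from bs4 import BeautifulSoup

html = """
<table id="prices">
  <tr><th>Product</th><th>Price</th></tr>
  <tr><td>Keyboard</td><td>$25.00</td></tr>
  <tr><td>Mouse</td><td>$12.50</td></tr>
</table>
"""


def parse_price_table(markup: str) -> List[Dict[str, str]]:
    """Convert each data row of table#prices into a {header: cell} dict."""
    soup = BeautifulSoup(markup, "html.parser")
    rows = soup.select("table#prices tr")  # CSS selector: all rows of that table
    headers = [th.get_text(strip=True) for th in rows[0].find_all("th")]
    records = []
    for row in rows[1:]:
        cells = [td.get_text(strip=True) for td in row.find_all("td")]
        records.append(dict(zip(headers, cells)))
    return records


print(parse_price_table(html))
```

Reading the header row first and zipping it with each data row keeps the code working if the columns are reordered on the page.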

What is Requests?

Requests is a Python library for sending HTTP requests. It hides the complexity of making requests behind a simple API and lets you send HTTP/1.1 requests. Some of its features include:

- Connection pooling

- Keep-Alive

- Support for all HTTP method types, such as GET, POST, PUT, DELETE, etc.

- Built-in support for authentication and SSL/TLS certificate verification

- Automatic decompression of response bodies

- A simple, synchronous API (Requests itself does not support asynchronous programming)

- Ability to send and receive JSON data easily

- Ability to work with cookies, sessions, etc.

- Clear exceptions for connection errors and timeouts, plus raise_for_status() for HTTP error status codes
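Several of the features above, such as connection pooling, keep-alive and cookie handling, come from reusing a requests.Session object across requests. This is a minimal sketch; the User-Agent string and the helper names `build_session` and `get_json` are hypothetical.

```python
from typing import Optional

import requests


def build_session(user_agent: str) -> requests.Session:
    """Create a session whose headers are sent with every request."""
    session = requests.Session()
    # A Session reuses TCP connections (keep-alive) and keeps cookies between calls
    session.headers.update({"User-Agent": user_agent})
    return session


def get_json(session: requests.Session, url: str, params: Optional[dict] = None):
    """GET url with optional query parameters and decode the JSON body."""
    response = session.get(url, params=params, timeout=10)
    response.raise_for_status()  # raise requests.HTTPError on 4xx/5xx
    return response.json()


session = build_session("my-scraper/0.1")

# Preview the final URL and headers without sending anything over the network
prepared = session.prepare_request(
    requests.Request("GET", "https://www.example.com/search", params={"q": "python", "page": 2})
)
print(prepared.url)
```

Passing params as a dictionary lets Requests handle URL encoding, which is safer than building query strings by hand.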

How to Install Requests in Python?

To install the Requests library, use the pip package manager by running the following command in your terminal or command prompt:

```bash
pip install requests
```

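Create Your First Example in Requests

Here is a basic example of making an HTTP GET request with the Requests library:

```python
import requests

# Send a GET request; the timeout (in seconds) keeps the call from hanging forever
response = requests.get("https://www.example.com", timeout=10)

print(response.status_code)  # HTTP status code returned by the server
print(response.content)      # raw response body as bytes
```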

In this example, requests.get() sends a GET request to the specified URL, and the response is stored in the response variable. The status_code attribute holds the HTTP status code returned by the server, and the content attribute contains the response body as bytes (use response.text to get the body decoded as a string).
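In real scraping code a request can fail before any status code exists (DNS errors, refused connections, timeouts, malformed URLs), so it is worth catching Requests' exceptions. This is a sketch; `fetch_page` is a hypothetical helper that returns None instead of raising.

```python
from typing import Optional

import requests


def fetch_page(url: str, timeout: float = 10.0) -> Optional[str]:
    """Return the page body as text, or None if the request fails."""
    try:
        response = requests.get(url, timeout=timeout)
        # Turn 4xx/5xx status codes into requests.HTTPError
        response.raise_for_status()
    except requests.exceptions.RequestException as exc:
        # RequestException is the base class of ConnectionError, Timeout,
        # HTTPError, MissingSchema and the other Requests errors
        print(f"Request to {url!r} failed: {exc}")
        return None
    # .text decodes the body using the detected encoding; .content is raw bytes
    return response.text
```

Catching the base RequestException keeps a long-running scraper alive when a single page fails; you can catch narrower classes such as requests.exceptions.Timeout if you want to retry only certain errors.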

What is Python Selenium?

Selenium is a web testing framework that lets you automate web browsers. It is mainly used to automate the testing of web applications, and it supports several browsers, including Chrome, Firefox, Safari, Edge and Internet Explorer. Selenium lets you interact with web pages and their elements from programming languages such as Python, Java, C#, Ruby and JavaScript. It is widely used for web scraping, web automation and cross-browser testing.

How to Install Python Selenium?

To install Selenium, use the pip package manager by running the following command in your terminal:

```bash
pip install selenium
```

Selenium controls a browser through a web driver, such as ChromeDriver for Chrome or GeckoDriver for Firefox. Since Selenium 4.6, the bundled Selenium Manager downloads a matching driver automatically; with older versions you need to download the appropriate driver for your system from its official website and add its location to your system's PATH environment variable.

What is Scrapylib?

Scrapylib is a Python library that provides utilities for working with Scrapy, the popular framework for web scraping and data extraction. It is designed to complement Scrapy by adding extra components, such as middlewares, pipelines and extensions, that make the framework easier to work with.

How to Install Scrapylib?

You can install Scrapylib with pip. Simply run the following command in a terminal or command prompt window:

```bash
pip install scrapylib
```

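Using Scrapylib

Scrapylib is not an HTTP client: it does not provide a function such as scrapylib.http.get() for sending requests. Its components are meant to be enabled inside a Scrapy project (through settings such as SPIDER_MIDDLEWARES or ITEM_PIPELINES), where Scrapy itself sends the requests. If you just want to send a quick HTTP request and check the response outside Scrapy, Requests does the job:

```python
import requests

# Send a GET request to a website
response = requests.get("http://www.example.com", timeout=10)

# Check the status code of the response
if response.status_code == 200:
    # Print the response body
    print(response.text)
else:
    # Handle the error
    print("Error:", response.status_code)
```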

What is PyQuery?

PyQuery is a Python library for working with HTML and XML documents. It lets you query a document using a syntax similar to jQuery, a popular JavaScript library for working with HTML documents.

With PyQuery, you can easily parse an HTML or XML document, extract specific elements and their content, modify the document, and much more.

How to Install PyQuery?

You can install PyQuery with pip. Simply run the following command in a terminal or command prompt window:

```bash
pip install pyquery
```

Create Your First Example in PyQuery

Here is a basic example of how to use PyQuery to parse an HTML document and extract information:

```python
from pyquery import PyQuery as pq

# Load an HTML document
html = '''
<html>
  <head>
    <title>Example Page</title>
  </head>
  <body>
    <h1>Hello, World!</h1>
    <p>This is a simple example page.</p>
  </body>
</html>
'''

# Create a PyQuery object from the HTML
doc = pq(html)

# Extract the title of the page
title = doc('head > title').text()
print("Title:", title)

# Extract the h1 element and its content
h1 = doc('body > h1').text()
print("H1:", h1)

# Extract the p element and its content
p = doc('body > p').text()
print("P:", p)
```

FAQs:

Which Python library is best for web scraping?

The best Python library for web scraping depends on several factors, such as the complexity of the task, personal preference and your specific requirements. That said, two of the most popular choices are Scrapy and BeautifulSoup. Which one should you choose? For simpler scraping tasks, BeautifulSoup is an excellent choice; for complex scraping projects, Scrapy is usually the better option, because it is a powerful, extensible framework designed specifically for that kind of work.

Is Python best for web scraping?

Python is one of the best programming languages for web scraping, thanks to its simple syntax and its rich ecosystem of libraries and frameworks built for this purpose. Libraries such as BeautifulSoup, Scrapy and Requests let you use Python for both simple and complex web scraping projects.

Is Scrapy better than BeautifulSoup?

Scrapy and BeautifulSoup are both popular tools for web scraping in Python, but they serve different purposes and have different strengths. BeautifulSoup is a library designed for parsing HTML and XML documents and extracting data from them. It is good for simpler scraping tasks and offers an easy interface for navigating and searching through HTML/XML documents.

On the other hand, Scrapy is one of the most powerful web scraping frameworks and is suited to large, complex projects. It offers features such as asynchronous requests, built-in support for XPath selectors, and a powerful system for managing spiders and pipelines.
