In this article we will look at web scraping in Flask. We live in the age of information, and data is at the heart of it. If you want to gather data, web scraping is one of the most useful tools for extracting it from websites. Flask, meanwhile, is one of the popular Python web frameworks. This article shows how to use the two together.

What is Flask?

Flask is a lightweight, easy-to-use web framework for building web applications in Python. It provides a simple but powerful foundation for creating scalable and modular applications.

Web scraping means automatically extracting data from websites by parsing the HTML or XML content of their pages. With web scraping we can collect information from different sources, such as news articles, product listings, or social media profiles.

Before we start scraping, let’s create our Flask application. First, install Flask with pip (pip install flask). Once Flask is installed, you can create a basic Flask application with just a few lines of code.

```python
from flask import Flask

app = Flask(__name__)

@app.route('/')
def hello_world():
    return 'Welcome to codeloop.org'
```

This simple Flask application creates a route for the root URL (“/”) and returns a greeting message.
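To check that the route behaves as described without starting a server, one option is Flask's built-in test client. The following is a minimal sketch that repeats the application above; the variable names status and body are only for illustration.

```python
from flask import Flask

app = Flask(__name__)


@app.route('/')
def hello_world():
    return 'Welcome to codeloop.org'


# The test client sends requests to the app in-process,
# so no development server needs to be running.
with app.test_client() as client:
    response = client.get('/')
    status = response.status_code
    body = response.get_data(as_text=True)

print(status, body)
```

This kind of check is handy while developing, because it exercises the route exactly as a browser request would but runs as an ordinary Python script.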

To perform web scraping in a Flask application, we need a web scraping library such as BeautifulSoup or Scrapy. These libraries provide powerful tools for parsing and extracting data from HTML or XML documents.

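First, install BeautifulSoup with pip. The complete example in this article also uses the requests library to download the page, so install it at the same time.

```bash
pip install beautifulsoup4 requests
```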
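Before pointing BeautifulSoup at a live website, it can help to try it on a small HTML string. The sketch below assumes a made-up snippet (the html variable and its contents are hypothetical, not taken from any real page) and shows the two basic lookups, find and find_all.

```python
from bs4 import BeautifulSoup

# Hypothetical HTML snippet standing in for a downloaded page.
html = """
<html>
  <body>
    <h1>Sample page</h1>
    <div class="entry-summary">  First post summary.  </div>
    <div class="entry-summary">Second post summary.</div>
  </body>
</html>
"""

soup = BeautifulSoup(html, 'html.parser')

# find() returns the first matching element, or None if nothing matches.
first = soup.find('div', class_='entry-summary')
first_text = first.get_text().strip()

# find_all() returns every matching element.
all_texts = [
    div.get_text().strip()
    for div in soup.find_all('div', class_='entry-summary')
]

print(first_text)
print(all_texts)
```

Working on an inline string like this makes it easy to experiment with selectors without sending any network requests.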

Here is the complete code for this article:

```python
from flask import Flask
from bs4 import BeautifulSoup
import requests

app = Flask(__name__)

@app.route('/')
def hello_world():
    return 'Welcome to codeloop.org'

@app.route('/scrape')
def scrape_website():
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36'
    }

    try:
        # Make request to the target website with custom headers
        response = requests.get('https://codeloop.org/', headers=headers)

        # Check if the request was successful
        if response.status_code == 200:
            # Parse the HTML content using BeautifulSoup
            soup = BeautifulSoup(response.content, 'html.parser')

            # Find the desired element and extract its text content (if found)
            target_element = soup.find('div', class_='entry-summary')
            if target_element:
                data = target_element.get_text().strip()  # Strip any extra whitespace
                return data
            else:
                return 'No data found on the website'
        else:
            return f'Failed to retrieve website data: {response.status_code}'
    except requests.RequestException as e:
        return f'An error occurred while trying to retrieve the website data: {str(e)}'

if __name__ == '__main__':
    app.run(debug=True)
```

In the code above, we use BeautifulSoup's find method to locate the first <div> element with the class name entry-summary, which corresponds to the article summary of the latest blog post on the website. We then extract the text content of this element with get_text() and strip any leading or trailing whitespace.
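The find and get_text steps described above can also be pulled out into a small function, which makes the parsing logic testable without a network request. This is only a sketch: the function name extract_summary and its css_class parameter are hypothetical and not part of the article's code, and it uses get_text with separator and strip arguments to tidy whitespace between nested tags, which differs slightly from the plain get_text().strip() used above.

```python
from typing import Optional

from bs4 import BeautifulSoup


def extract_summary(html: str, css_class: str = 'entry-summary') -> Optional[str]:
    """Return the text of the first <div> with the given class, or None."""
    soup = BeautifulSoup(html, 'html.parser')
    element = soup.find('div', class_=css_class)
    if element is None:
        # Nothing matched, so let the caller decide what to show.
        return None
    # strip=True trims each text fragment and drops empty ones;
    # separator=' ' joins the remaining fragments with a single space.
    return element.get_text(separator=' ', strip=True)


found = extract_summary(
    '<div class="entry-summary"><p> Hello </p> <p>world</p></div>'
)
missing = extract_summary('<p>No summary here</p>')

print(found)
print(missing)
```

Inside the /scrape route, a function like this could be called with response.content, and a None result would map to the 'No data found on the website' message.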

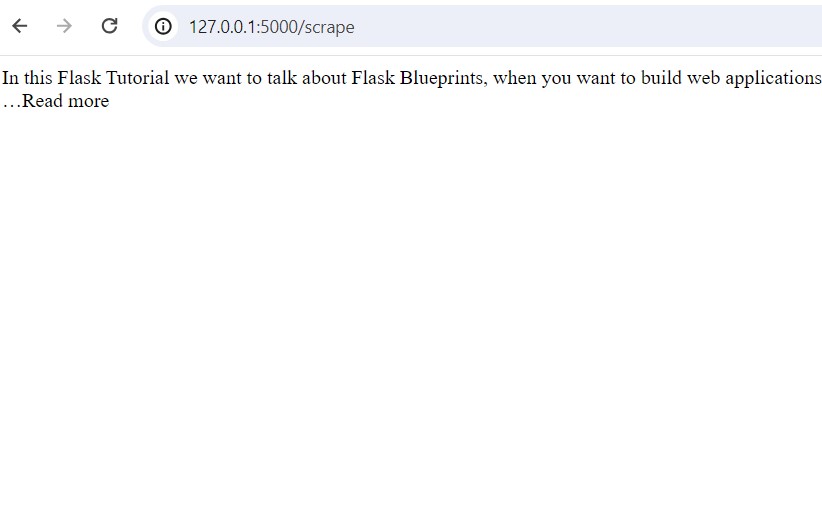

Run the code and go to http://127.0.0.1:5000/scrape, this will be the result
